Introduction

This is a follow-up post to our original publication: How to compile and distribute your iOS SDK as a pre-compiled xcframework.

In this technical article, we dig into best practices around:

- How to automate the deployment of different variants of your SDK to provide a fully customized, white-glove service for your customers

- How this approach allows your SDK to work offline out-of-the-box right from the first app start

Build Automation

As everyone who knows me can tell you, I love automating iOS app-development processes. Having built fastlane, I learned just how much time you can save and, most importantly, how many human errors you can prevent. With ContextSDK, we have fully automated the release process.

For example, a release needs the version number updated consistently in many places: your two podspec files, your URLs, the git tags, the docs, etc.
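A minimal Ruby sketch of one such step, assuming the version lives in a line such as `s.version = '1.2.3'`. The helper name `bump_podspec_version` and the sample podspec text are hypothetical, not ContextSDK's actual release script:

```ruby
# Hypothetical helper: rewrite the `*.version = '...'` line of a podspec.
# It works on a string, so the same logic can be applied to every podspec file.
VERSION_LINE = /^(\s*\w+\.version\s*=\s*)(['"])[^'"]+\2/

def bump_podspec_version(podspec_source, new_version)
  unless new_version.match?(/\A\d+\.\d+\.\d+\z/)
    raise ArgumentError, "expected a version like 1.2.3, got #{new_version.inspect}"
  end
  unless podspec_source.match?(VERSION_LINE)
    raise ArgumentError, 'no version line found in podspec'
  end

  # Keep the original indentation and quote style, replace only the number.
  podspec_source.sub(VERSION_LINE) { "#{$1}#{$2}#{new_version}#{$2}" }
end

# Made-up podspec content used only for this illustration.
SAMPLE_PODSPEC = <<~PODSPEC
  Pod::Spec.new do |s|
    s.name    = 'ExampleSDK'
    s.version = '1.2.3'
  end
PODSPEC

puts bump_podspec_version(SAMPLE_PODSPEC, '1.3.0')
```

Failing loudly when no version line is found is deliberate: a silent no-op is exactly the kind of human-style error that automation is meant to remove.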

Custom Binaries for Each Customer

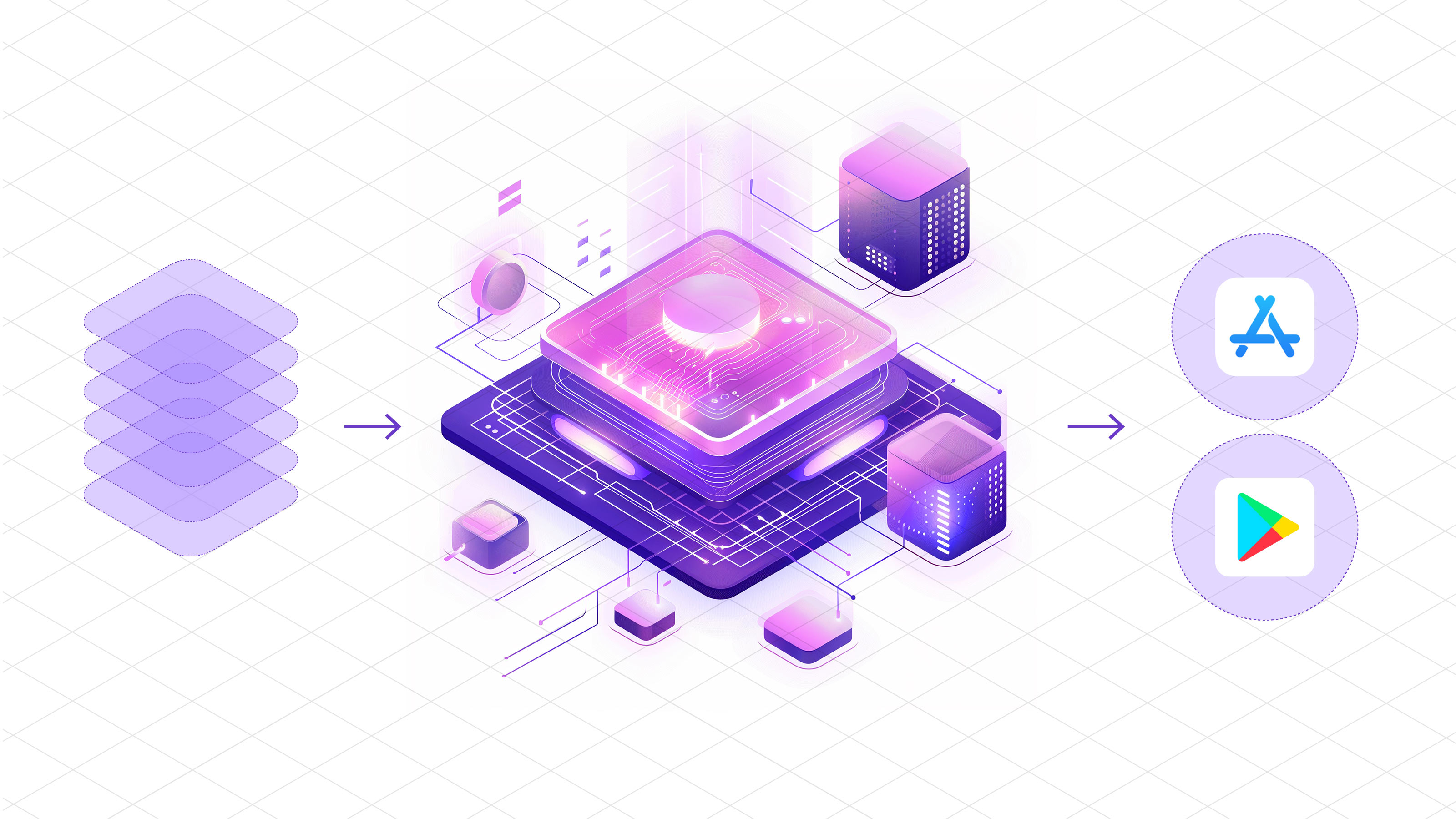

With ContextSDK, we train and deploy custom machine learning models for every one of our customers. The easiest solution, and the one most companies would choose, is to send a network request the first time the app is launched to download the latest custom model for that particular app. However, we believe in fast and robust on-device machine learning model execution that doesn’t rely on an active internet connection. In particular, many major use cases of ContextSDK rely on reacting to the user’s context within 2 seconds of the app’s first launch, to immediately optimize the onboarding flow, permission prompts, and other aspects of your app.

We needed a way to distribute each customer’s custom model with the ContextSDK binary, without including any models from other customers. To do this, we fully automated the deployment of custom SDK binaries, each including the exact custom model and features the customer needs.

Our customer management system provides the list of custom SDKs to build, tied together with the details of the custom models:
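As a rough illustration, such a list might have the following shape. Every field name, bundle identifier, and model file name here is a made-up placeholder rather than ContextSDK's real schema:

```ruby
require 'json'

# Hypothetical payload from a customer management system:
# one entry per custom SDK build, with the models that belong to it.
SAMPLE_PAYLOAD = <<~JSON
  [
    {
      "bundle_identifier": "com.example.fitness",
      "sdk_version": "1.0.0",
      "models": [
        { "name": "onboarding", "version": 3, "file": "onboarding_v3.mlmodel" }
      ]
    },
    {
      "bundle_identifier": "com.example.news",
      "sdk_version": "1.0.0",
      "models": [
        { "name": "upsell", "version": 7, "file": "upsell_v7.mlmodel" },
        { "name": "push_timing", "version": 2, "file": "push_timing_v2.mlmodel" }
      ]
    }
  ]
JSON

CustomModel = Struct.new(:name, :version, :file, keyword_init: true)
CustomSDK   = Struct.new(:bundle_identifier, :sdk_version, :models, keyword_init: true)

# Turn the raw JSON into typed structs; `fetch` fails fast on missing keys.
def parse_custom_sdks(json)
  JSON.parse(json, symbolize_names: true).map do |entry|
    models = entry.fetch(:models).map { |m| CustomModel.new(**m) }
    CustomSDK.new(
      bundle_identifier: entry.fetch(:bundle_identifier),
      sdk_version: entry.fetch(:sdk_version),
      models: models
    )
  end
end

parse_custom_sdks(SAMPLE_PAYLOAD).each do |sdk|
  puts "#{sdk.bundle_identifier}: #{sdk.models.map(&:file).join(', ')}"
end
```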

Our deployment scripts then iterate over each app and include all custom models for that app. There are multiple ways to inject custom classes and custom code before each build. One approach we took to include custom models depending on the app is to have our internal podspec add files dynamically:
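A minimal sketch of what that could look like, assuming an environment variable named `CUSTOM_MODEL_APP` and a `CustomModels/<app>/` folder layout. Both are hypothetical stand-ins, as are the pod name and the glob patterns. The file-selection logic sits in a plain Ruby helper so it can be exercised without CocoaPods installed:

```ruby
# Hypothetical helper: decide which file globs the pod should include.
# `env` defaults to the process environment but can be any Hash-like object.
def sdk_source_files(env = ENV)
  files = ['Sources/**/*.{swift,h,m}']
  app = env['CUSTOM_MODEL_APP'].to_s.strip
  return files if app.empty?

  # Guard against path tricks such as "../other-customer".
  unless app.match?(/\A[A-Za-z0-9._-]+\z/) && !app.include?('..')
    raise ArgumentError, "invalid app identifier: #{app.inspect}"
  end

  # Assumed layout: one folder per app; adjust the pattern to the file
  # types your custom models are shipped as.
  files + ["CustomModels/#{app}/**/*"]
end

# Inside the podspec, the helper would be used roughly like this:
#
#   Pod::Spec.new do |s|
#     s.name         = 'ExampleSDK'
#     s.version      = '1.0.0'
#     s.source_files = sdk_source_files
#   end

puts sdk_source_files({ 'CUSTOM_MODEL_APP' => 'com.example.news' })
```

Because a podspec is ordinary Ruby, reading `ENV` while the spec is evaluated is enough to vary the file list from one build to the next.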

In the above example you can see how we leverage a simple environment variable to tell CocoaPods which custom model files to include.
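To connect this with the per-app loop described earlier, a deployment script could set that variable once per build. The sketch below only prints the commands by default. The variable name `CUSTOM_MODEL_APP`, the app list, and the `./build_xcframework.sh` script with its `--output` option are hypothetical placeholders:

```ruby
# Hypothetical list of apps that each get their own SDK binary.
APPS = ['com.example.fitness', 'com.example.news'].freeze

# Build the environment and command for one app without running anything.
def build_plan_for(app)
  {
    env: { 'CUSTOM_MODEL_APP' => app },
    command: ['./build_xcframework.sh', '--output', "build/#{app}"]
  }
end

# Run (or just print) one build per app. Passing the env hash to `system`
# scopes the variable to that child process only.
def build_all(apps, dry_run: true)
  apps.map do |app|
    plan = build_plan_for(app)
    if dry_run
      puts "CUSTOM_MODEL_APP=#{app} #{plan[:command].join(' ')}"
    else
      system(plan[:env], *plan[:command]) || raise("build failed for #{app}")
    end
    plan
  end
end

build_all(APPS)
```

Scoping the variable to each child process, rather than mutating the script's own environment, means one customer's setting cannot leak into the next customer's build.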

Because iOS projects are compiled, we can guarantee the integrity of the codebase itself. Additionally, hundreds of automated tests (plus manual tests) verify that the custom models, SDK versions, and model versions line up, and that each customer’s integration works in a separate, auto-generated Xcode project.

Side note: ContextSDK also supports over-the-air updates of new CoreML files, to replace the ones bundled with the app. This allows us to continuously improve our machine learning models over time, as we calibrate our context signals to each individual app. Under the hood, we deploy new challenger models to a subset of users, compare their performance, and gradually roll them out to more users if they match expectations.
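One common way to implement this kind of gradual rollout is deterministic bucketing: hash a stable identifier into a bucket from 0 to 99 and serve the challenger to buckets below the current rollout percentage. The sketch below illustrates that general technique. It is not a description of ContextSDK's internals, and the function names, the identifier format, and the salt are hypothetical:

```ruby
require 'digest'

# Map an install identifier to a stable bucket in 0..99.
# The salt keeps buckets independent between different experiments.
def rollout_bucket(install_id, salt)
  Digest::SHA256.hexdigest("#{salt}:#{install_id}")[0, 8].to_i(16) % 100
end

# An install gets the challenger model when its bucket falls below the
# rollout percentage; raising the percentage only ever adds installs.
def use_challenger?(install_id, rollout_percent, salt: 'model-rollout')
  rollout_bucket(install_id, salt) < rollout_percent
end

ids = (1..1_000).map { |i| "install-#{i}" }
share = ids.count { |id| use_challenger?(id, 10) }
puts "#{share} of #{ids.size} installs would receive the challenger at 10%"
```

Because the bucket depends only on the identifier and the salt, an install keeps the same assignment across launches, which keeps the comparison between champion and challenger stable.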

Conclusion

Building and distributing a custom binary for each customer is easier than you may expect: once your SDK deployment is automated, building custom binaries is only a small extra step.

This architecture allows us to iterate and move quickly while keeping a very robust development and deployment pipeline. Additionally, once we segment our paid features for ContextSDK further, we can automatically include only the subset of functionality each customer wants enabled. For example, we recently launched AppTrackingTransparency.ai, where a customer may only want to use the ATT-related features of ContextSDK, instead of using it to optimize their in-app conversions.

ContextSDK, a leading Edge AI startup, offers two products to enhance mobile apps based on real-world context: ContextDecision for optimizing in-app upgrade prompts, onboarding flows, and increasing engagement, and ContextPush for sending push notifications at the perfect moment, achieving the highest open rates and lowest opt-out rates.

This is one of many upcoming technical blog posts from ContextSDK. You can subscribe to our newsletter to stay in the loop, or follow us on X and LinkedIn.