
Introduction

In the world of app development, understanding user context is pivotal. At ContextSDK, a leading on-device AI startup, we offer an iOS SDK that does just that. This blog post dives into the backbone of our operations: the backend infrastructure that efficiently manages 40 million context events every day.

Our Backend Infrastructure: A Deep Dive

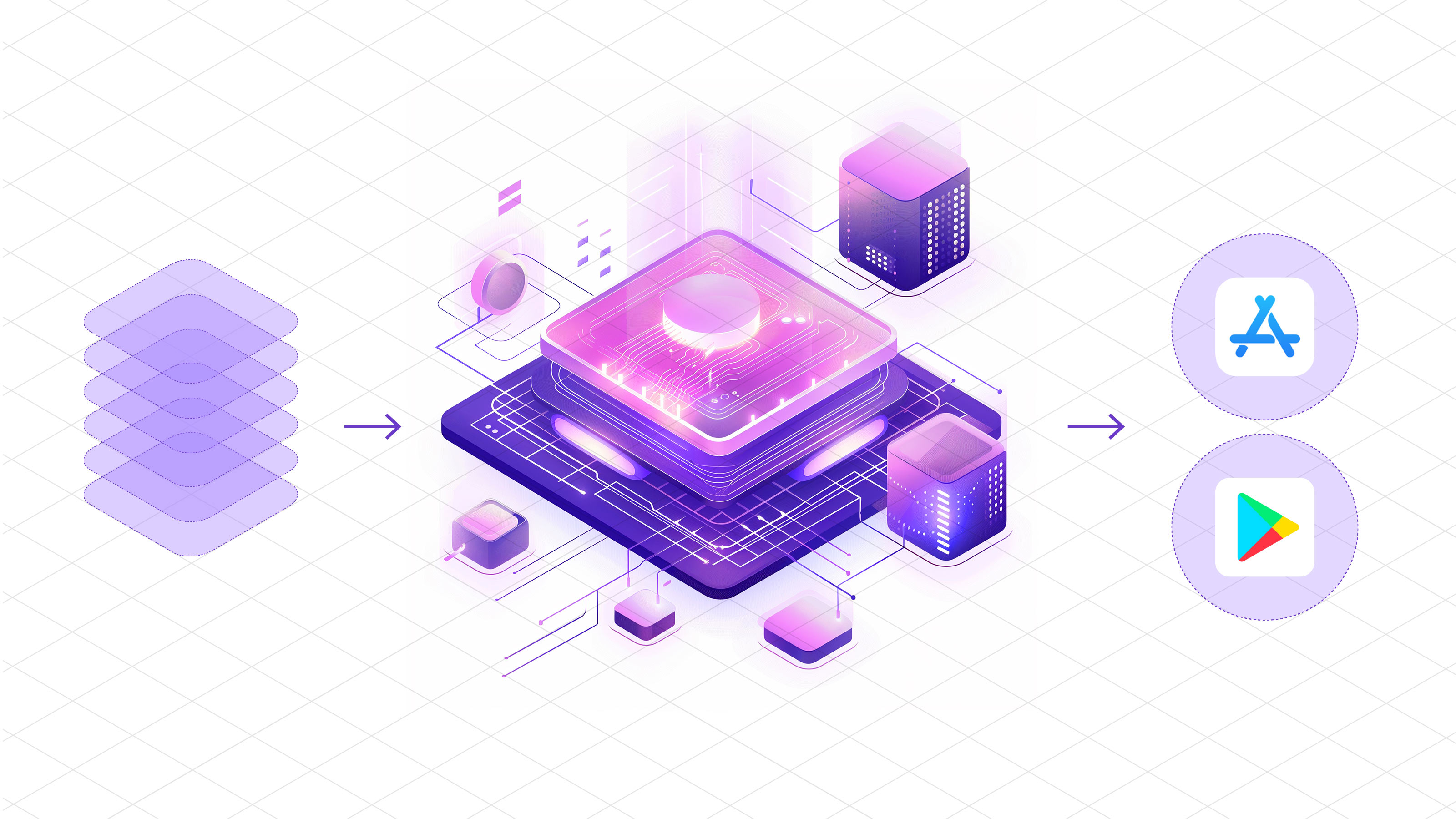

The journey of an event, from its origin to its final destination, is a multi-stage process, and each stage is important for maintaining the integrity and usability of the data we collect.

Event Collection: It all begins on the user's iOS device. Here we collect over 170 unique signals, which include not only basics such as the time or battery level, but also advanced signals such as accelerometer and gyroscope motion data. These signals are collected multiple times during each usage session. Our SDK batches all events from a single session and sends them in one consolidated request. This approach not only streamlines data transfer but also minimizes network load.
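To make the per-session batching concrete, here is a minimal sketch in Python. The real SDK runs on iOS, so this is only an illustration: the `SessionBatcher` class, the payload field names, and the two example signals are all hypothetical.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Any


@dataclass
class SessionBatcher:
    """Collects the context events of one usage session (hypothetical type)."""

    session_id: str
    events: list[dict[str, Any]] = field(default_factory=list)

    def record(self, signals: dict[str, Any]) -> None:
        # Each event is one snapshot of signals taken during the session.
        self.events.append({"ts": time.time(), "signals": signals})

    def build_request_body(self) -> str:
        # One consolidated payload per session instead of one request per event.
        return json.dumps({"session_id": self.session_id, "events": self.events})


batcher = SessionBatcher(session_id="session-123")
batcher.record({"battery_level": 0.81, "hour_of_day": 14})
batcher.record({"battery_level": 0.80, "hour_of_day": 14})
body = batcher.build_request_body()
```

However many snapshots are taken during a session, the client issues a single upload for it.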

Initial Processing - The Ingest Server: The batches sent by iOS are received by the "Ingest" server. Built on NodeJS, this server is the gatekeeper, ensuring that only correctly formatted data proceeds, and that only data from authorized clients is accepted. It accumulates these requests into even larger batches, optimizing for the subsequent stages. Crucially, it also plays a role in data integrity – if the iOS client does not receive a 200 response, the data persists on the device for retransmission, preventing data loss during outages.
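The gatekeeper role can be sketched as a single function that maps a request to the status code the client receives. This is an illustration in Python rather than the NodeJS code of the actual server. The API-key check, the payload shape, and the specific 400/401 codes are assumptions made for the example.

```python
from typing import Any

# Hypothetical allow-list; the post does not describe how clients are authorized.
AUTHORIZED_API_KEYS = {"demo-key"}


def handle_ingest(api_key: str, body: Any) -> int:
    """Return the HTTP status code the client would receive for this batch."""
    if api_key not in AUTHORIZED_API_KEYS:
        return 401  # unknown client: reject before looking at the data
    if not isinstance(body, dict):
        return 400
    events = body.get("events")
    if not isinstance(body.get("session_id"), str) or not isinstance(events, list):
        return 400
    # Every event must be an object that carries a "signals" field.
    if not all(isinstance(event, dict) and "signals" in event for event in events):
        return 400
    return 200  # only now may the client discard its local copy
```

Only the 200 path lets a batch continue down the pipeline, which is also the only response that allows the device to drop its local copy.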

This means that no matter what happens, for example an outage of GCP or our own systems being overloaded, the chance of data loss is minimal, because the copy on the user's device is only cleared once it has been positively acknowledged as ingested by our system. All our infrastructure is set up to provide back pressure upstream (for example, RabbitMQ is configured with limits on queue size), so even if the last service in the chain (for example Clickhouse) is overloaded, clients eventually start to get 4XX responses and resend their data as soon as we have the load on our end under control again.
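The interplay of back pressure and client-side retention can be sketched as follows. This is a simplified in-memory model, not the production setup: `BoundedPipeline` stands in for the whole backend chain, `ClientOutbox` for the on-device store, and the 429 status is an assumed example of a 4XX response.

```python
import queue
from typing import Any, Callable


class BoundedPipeline:
    """Stand-in for the backend: accepts batches until its queue is full."""

    def __init__(self, max_batches: int) -> None:
        self._queue: queue.Queue = queue.Queue(maxsize=max_batches)

    def accept(self, batch: Any) -> int:
        try:
            self._queue.put_nowait(batch)
        except queue.Full:
            return 429  # assumed status code signalling overload
        return 200

    def drain(self) -> list:
        # Simulates downstream services catching up with the backlog.
        drained = []
        while not self._queue.empty():
            drained.append(self._queue.get_nowait())
        return drained


class ClientOutbox:
    """Stand-in for the device: keeps every batch until it is acknowledged."""

    def __init__(self) -> None:
        self.pending: list = []

    def add(self, batch: Any) -> None:
        self.pending.append(batch)

    def flush(self, send: Callable[[Any], int]) -> None:
        # Drop a batch only after a 200; everything else stays for a retry.
        self.pending = [batch for batch in self.pending if send(batch) != 200]
```

With a pipeline limited to one batch, a client holding two batches gets the first acknowledged and keeps the second until the backend has drained, at which point the next `flush` succeeds.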

Queuing and Routing - RabbitMQ: RabbitMQ acts as a central hub routing between all our backend services. After initial parsing and validation by the "Ingest" server, the data enters our RabbitMQ system. From here the data is routed to two services: "Schupferl" and "Activity Tagger".
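Delivering the same message to two consumers is what a RabbitMQ fanout exchange does: every queue bound to the exchange receives its own copy. Whether our setup uses exactly this exchange type is left open here, so treat the following as an in-memory model of the idea, with hypothetical queue names, and not as broker configuration.

```python
from collections import deque
from typing import Any


class FanoutExchange:
    """In-memory model of fanout routing: each bound queue gets every message."""

    def __init__(self) -> None:
        self.queues: dict[str, deque] = {}

    def bind(self, queue_name: str) -> None:
        self.queues[queue_name] = deque()

    def publish(self, message: Any) -> None:
        # Consumers read independently, so each queue holds its own copy.
        for bound_queue in self.queues.values():
            bound_queue.append(message)


exchange = FanoutExchange()
exchange.bind("schupferl")         # hypothetical queue name
exchange.bind("activity_tagger")   # hypothetical queue name
exchange.publish({"session_id": "session-123", "events": []})
```

Adding a third consumer later only requires binding one more queue; the publisher does not change.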

Data Storage - Schupferl: Here "Schupferl" (an Austrian word for throwing), another NodeJS application, takes center stage. It prepares the events for storage in our Clickhouse database. Its strategy of batching events into large groups aligns with Clickhouse’s preference for fewer, larger inserts, enhancing storage efficiency.
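The batching strategy can be sketched as a buffer that is flushed once it reaches a row limit. This is a Python illustration, not the NodeJS implementation. The `insert_rows` callback stands in for the actual database insert, and the row limit is an arbitrary example value.

```python
from typing import Any, Callable


class BatchingWriter:
    """Buffers rows and hands them to the database in large groups."""

    def __init__(self, insert_rows: Callable[[list], None], max_rows: int) -> None:
        self._insert_rows = insert_rows  # hypothetical insert function
        self._max_rows = max_rows
        self._buffer: list = []

    def add(self, row: Any) -> None:
        self._buffer.append(row)
        if len(self._buffer) >= self._max_rows:
            self.flush()

    def flush(self) -> None:
        # One insert for the whole buffer instead of one insert per row.
        if self._buffer:
            self._insert_rows(self._buffer)
            self._buffer = []


insert_sizes: list[int] = []
writer = BatchingWriter(lambda rows: insert_sizes.append(len(rows)), max_rows=3)
for event_number in range(7):
    writer.add({"event": event_number})
writer.flush()  # write the remainder, e.g. on a timer or at shutdown
```

Seven rows result in three inserts here. A production version would typically also flush on a timer so that a slow trickle of events does not sit in the buffer indefinitely.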

Data Enrichment - Activity Tagger: Simultaneously, the "Activity Tagger" comes into play. This Python-based application uses ML to transform accelerometer data into meaningful insights, categorizing it into activities like walking, standing, or being active and on-the-go. These enriched events are then routed back to "Schupferl" for insertion into Clickhouse. Keeping this step separate from inserting the raw data also allows us to re-run the tagging any time we improve our activity recognition machine learning models.
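To show the shape of the tagging step, here is a deliberately simple stand-in. It is not the ML model used in production: it replaces the model with a hand-written rule on how much the acceleration magnitude varies, and the thresholds, labels, and the `tag_activity` name are made up for the example.

```python
import math
import statistics


def tag_activity(samples: list[tuple[float, float, float]]) -> str:
    """Label a window of (x, y, z) accelerometer samples, measured in g."""
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    # A device at rest reads a nearly constant magnitude; motion adds variation.
    spread = statistics.pstdev(magnitudes)
    if spread < 0.05:   # illustrative threshold, not a tuned value
        return "standing"
    if spread < 0.5:    # illustrative threshold, not a tuned value
        return "walking"
    return "active"


at_rest = [(0.0, 0.0, 1.0)] * 4
steady_steps = [(0.0, 0.0, 0.9), (0.0, 0.0, 1.1)] * 2
label_at_rest = tag_activity(at_rest)
label_steps = tag_activity(steady_steps)
```

Because the function only reads raw samples and returns a label, it can be re-run over stored raw events whenever the underlying logic improves, which is the benefit of keeping tagging separate from storage.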

Analysis and Application - Clickhouse: The final piece of our infrastructure is responsible for leveraging this vast repository of context events, which currently holds more than 3 billion events, each containing over 170 context signals. Using Clickhouse, the data is ready for real-time analysis and model building, directly feeding into the enhancement of our iOS SDK.

Our ContextPush product offers a new way to look at your app usage data, by harnessing context and sending notifications at optimal times, ensuring high engagement without GPS dependency.

Conclusion

At ContextSDK, our robust and scalable backend infrastructure is at the core of our service. It enables us to handle over 40 million events daily and to scale to 10x or 100x the current workload. This system not only ensures data integrity and operational efficiency but also keeps our infrastructure costs in check. It also allows us to apply real-time processing of events at scale simply by routing the events to new targets using our message broker.