Introduction

As technology evolves, understanding how users interact with their devices in real-world scenarios has become increasingly important. With the introduction of Apple's Vision Pro headset, developers now have a unique opportunity to delve deeper into user behavior. In this blog post, we explore how ContextSDK seamlessly integrates with Vision Pro, offering developers valuable insights into user contexts. By leveraging accelerometer data and machine learning, ContextSDK empowers developers to create more personalized experiences tailored to users' everyday lives, whether they're on the move or relaxing during a quiet evening.

Context + Vision Pro

ContextSDK is the best solution for understanding which real-world context a user is currently in: for example, whether they are in a busy environment or enjoying a calm moment at home. These are vastly different situations in which someone might use your app.

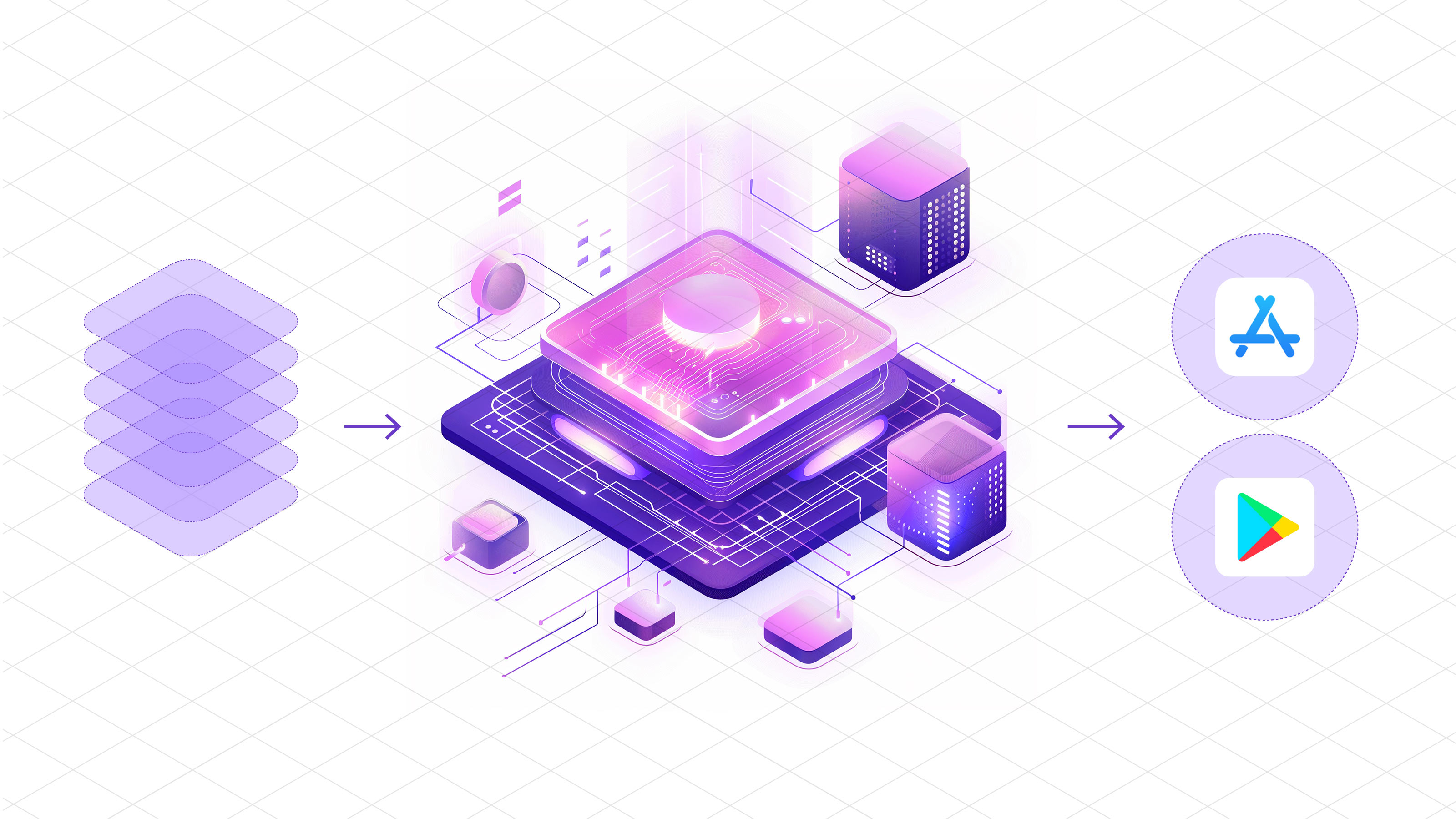

Because Vision Pro is so new, there isn't much data yet on where people actually use it. ContextSDK is ready to fill that gap: using the headset's built-in accelerometer, we can infer the same kinds of insights we already provide for hundreds of millions of iPhones. Since our machine learning models run on-device, predictions are instant and don't depend on any external infrastructure, and we can still train and deploy new CoreML models over the air as they improve.
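To make the idea of on-device inference from accelerometer data more concrete, here is a minimal Swift sketch of a first step such a pipeline could take: summarizing a window of samples into simple features and mapping them to a coarse label. This is not ContextSDK's model. The types `AccelSample` and `WindowFeatures`, the functions `extractFeatures` and `coarseMotionState`, and the 0.05 g threshold are all hypothetical, and the single threshold merely stands in for a trained CoreML model.

```swift
import Foundation

/// One accelerometer reading in units of g, mirroring the x/y/z fields of
/// CoreMotion's CMAcceleration. Gravity is included, so a device at rest
/// reads a magnitude of about 1.0.
struct AccelSample {
    let x: Double
    let y: Double
    let z: Double

    /// Euclidean norm of the acceleration vector.
    var magnitude: Double { (x * x + y * y + z * z).squareRoot() }
}

/// Summary statistics over one window of samples (hypothetical features).
struct WindowFeatures {
    let meanMagnitude: Double
    let stdDevMagnitude: Double
}

/// Computes the mean and population standard deviation of the magnitude.
/// Returns nil for an empty window.
func extractFeatures(_ window: [AccelSample]) -> WindowFeatures? {
    guard !window.isEmpty else { return nil }
    let magnitudes = window.map { $0.magnitude }
    let n = Double(magnitudes.count)
    let mean = magnitudes.reduce(0.0, +) / n
    let variance = magnitudes.reduce(0.0) { $0 + ($1 - mean) * ($1 - mean) } / n
    return WindowFeatures(meanMagnitude: mean, stdDevMagnitude: variance.squareRoot())
}

/// Toy stand-in for an on-device model: one threshold on motion energy.
/// The 0.05 g default is illustrative, not a tuned value.
func coarseMotionState(_ features: WindowFeatures, threshold: Double = 0.05) -> String {
    features.stdDevMagnitude < threshold ? "stationary" : "moving"
}

// Example: a perfectly still device vs. a rhythmic vertical bounce.
let still = Array(repeating: AccelSample(x: 0, y: -1, z: 0), count: 50)
let bouncing = (0..<50).map { i in
    AccelSample(x: 0, y: -1 + 0.3 * sin(Double(i) * 0.5), z: 0)
}
if let f = extractFeatures(still) { print(coarseMotionState(f)) }
if let f = extractFeatures(bouncing) { print(coarseMotionState(f)) }
```

In a real app, the samples would come from CoreMotion and a trained classifier would replace the threshold. Separating feature extraction from the model is one way an updated model could be swapped in over the air while the rest of the pipeline stays fixed.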

ContextSDK will support visionOS in any app that has an open immersive space, because, according to Apple's documentation:

In visionOS, accelerometer data is available only when your app has an open immersive space. For more information, see ImmersiveSpace.

Integration is straightforward. The code sample below shows how to use ContextSDK to optimize the conversion rate of in-app purchases, but you can use the same approach for any flow that has a desired outcome.

[Image: code sample showing a ContextSDK integration for an in-app purchase flow]
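The pattern behind this kind of integration can be sketched in Swift: ask for a context-based decision before showing a purchase prompt, then report how the flow ended so future decisions can learn from it. Everything in this sketch (`ContextProviding`, `FlowResult`, `runUpsellFlow`, `StubContext`, and the `"upsell"` flow name) is hypothetical and is not ContextSDK's actual API; the SDK's own documentation defines the real calls.

```swift
import Foundation

/// Hypothetical interface standing in for a context SDK; not ContextSDK's real API.
protocol ContextProviding {
    /// Returns true if the current real-world context suits the given flow.
    func shouldProceed(flow: String) -> Bool
    /// Records how the flow ended, so future predictions can learn from it.
    func logOutcome(flow: String, converted: Bool)
}

/// What happened when the flow was attempted.
enum FlowResult: Equatable {
    case skipped
    case shown(converted: Bool)
}

/// Shows the paywall only in a suitable context, then reports the outcome.
func runUpsellFlow(using context: any ContextProviding,
                   presentPaywall: () -> Bool) -> FlowResult {
    let flow = "upsell"  // hypothetical flow identifier
    guard context.shouldProceed(flow: flow) else { return .skipped }
    let converted = presentPaywall()
    context.logOutcome(flow: flow, converted: converted)
    return .shown(converted: converted)
}

/// Test double: returns a fixed decision and keeps outcomes in memory.
final class StubContext: ContextProviding {
    let allow: Bool
    private(set) var outcomes: [(flow: String, converted: Bool)] = []

    init(allow: Bool) { self.allow = allow }

    func shouldProceed(flow: String) -> Bool { allow }
    func logOutcome(flow: String, converted: Bool) {
        outcomes.append((flow: flow, converted: converted))
    }
}

// Example: the same flow in a busy moment and in a calm one.
let busyMoment = StubContext(allow: false)
let calmMoment = StubContext(allow: true)
let busyResult = runUpsellFlow(using: busyMoment, presentPaywall: { true })
let calmResult = runUpsellFlow(using: calmMoment, presentPaywall: { true })
```

Keeping the decision behind a protocol lets you exercise the flow with a stub, as in the example, and plug in a real provider in production. In this sketch only flows that were actually shown are logged; a real SDK may also want to record skipped attempts.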

ContextSDK can distinguish all major user activities: walking, sitting, standing, lying in bed, driving (hopefully as a passenger), taking transit, and even being on an airplane.

We've built a demo app that shows the user's current real-world context. In the video, I'm using the Vision Pro while sitting and tap “Get current Context”, which displays “Sitting”. Once I stand up and tap the same button, the context switches to “Standing”. The labels may be a little hard to read in the video.

We want to thank Lukas Gehrer from Appful.io for enabling us to test our prototype with a freshly imported Apple Vision Pro here in Vienna.

Conclusion

All in all, we aim to become an essential part of the visionOS developer ecosystem by leveraging the user's real-world context, which only grows in importance as users expect their devices to better understand their behavior, goals, and preferences. By tapping into Vision Pro's sensors, we're gaining a richer understanding of how users interact with technology in their daily lives. As a leading on-device AI startup, ContextSDK offers developers a powerful tool to create apps that feel intuitive and responsive, whether users are out and about or enjoying a relaxed moment at home.